By Andy Minuth and Daniele Siragusano of FilmLight


This article is part 1 of a three-part series on modern broadcast workflows. You can find the other two parts of this series by following these links:

Part 2: Collaboration in modern post-production

Part 3: IT in modern broadcast

Introduction

A lot has happened in the post-production environment in recent years. High dynamic range (HDR) output, the Academy Color Encoding System (ACES) and comparable workflows are now standard in many areas. Broadcast environments, however, have always posed unique challenges for post-production, with an emphasis on creating uniform, stable and fast workflows. In addition to other developments, the increasing demand for HDR content requires a rethink of these workflows.

How would you design a state-of-the-art post-production workflow for broadcast today? Public broadcaster SWR in Germany faced this challenge in 2021. The plan was to move the digital colour correction department to completely new premises and modernise the workflows at the same time. At FilmLight, the manufacturer of the Baselight colour grading system, we’re proud to have supported SWR to realise these ambitions.

In this three-part series, we take you through the different aspects of this project. We begin by focusing on colour workflows, examining the change in approach that was implemented at SWR – and which offers opportunities to broadcasters worldwide.

A suite in SWR’s new colour correction department in Baden-Baden, where SDR and HDR are mastered. In addition to the HDR reference monitor, there is also a larger HDR OLED consumer display, which is matched to the reference display as closely as is technically possible.

Colour pipeline

The classic ‘video’ colour pipeline for TV broadcast was quite simple. Input, working and output colour spaces were identical, determined by the characteristics of the output display:

- 2.4 Gamma EOTF

- BT.709 colour primaries

This standard HD colour space is often called ‘Rec.709’. However, throughout this series, we will refer to it as ‘Rec.1886’ or ‘SDR’, for standard dynamic range.
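As a minimal sketch of the 2.4 gamma EOTF listed above, the following follows the reference formula in ITU-R BT.1886. The 100-nit white level and the zero black level are assumptions chosen for illustration, not values taken from this article.

```python
def bt1886_eotf(signal, white_nits=100.0, black_nits=0.0, gamma=2.4):
    """Map a normalised video signal (0..1) to display luminance in nits.

    Reference EOTF from ITU-R BT.1886: L = a * max(V + b, 0) ** gamma.
    white_nits and black_nits are assumed display parameters.
    """
    lw = white_nits ** (1.0 / gamma)
    lb = black_nits ** (1.0 / gamma)
    a = (lw - lb) ** gamma
    b = lb / (lw - lb)
    return a * max(signal + b, 0.0) ** gamma


# Print a small ramp to show how strongly the curve favours the shadows.
for v in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"signal {v:.2f} -> {bt1886_eotf(v):7.3f} nits")
```

With these assumed parameters, a 50% signal lands at roughly 19% of peak luminance, which is why a grade made directly on this signal is tied to this particular display behaviour.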

The HDR challenge

HDR poses a particular challenge in the broadcast environment, as grading for Rec.1886 has mainly been carried out using a display-referred approach. This means that the colourist directly manipulates the video signal on the monitor.

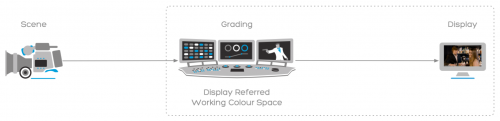

Display-referred grading: often the status quo, in which the colourist works directly in the display colour space and colour management is not strictly required. This approach is not ideal for HDR processing.

Relatively simple tools such as lift, gamma, gain and so on, originating from analogue image processing, were developed for this purpose. However, if you work display-referred on a standard dynamic range monitor, the colour correction is only valid for this specific dynamic range.

Colour management can convert the image to HDR colourimetrically, but it would look identical to the SDR version and not take advantage of the opportunities of HDR’s larger canvas. A tempting but naïve fix is to ‘bend up’ the corrected SDR image to HDR with a manual correction.

This approach is less than ideal, both creatively and technically. You risk stretching tonal values too far and generating undesirable colour shifts at the same time. On top of this, compression artefacts can become noticeable, and in the end you will be limited in how far you can use the HDR range before the images stop being appealing or acceptable.

An alternative approach would be to simply colour correct for HDR in a display-referred HDR colour space – similar to the legacy video workflow but in HDR.

Display-referred colour spaces such as ST.2084 ‘PQ’ and HLG[1] are well suited to encode colour-corrected HDR images efficiently. However, in practice, they have proven to be less suitable as working colour spaces and intermediate formats for colour grading.

Traditional grading operations do not work uniformly in HLG. For instance, with some tools, you can see a different reaction in the highlights than in the shadows. Furthermore, PQ is unsuitable for encoding unprocessed camera data because values below nominal black are not encoded. This affects the behaviour of camera noise.
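The point about values below nominal black can be illustrated with a short sketch. The PQ constants are those of SMPTE ST 2084; the noise samples are hypothetical and simply stand for zero-mean sensor noise around black, expressed as linear light.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits):
    """Inverse EOTF: absolute luminance in nits -> PQ signal in 0..1."""
    # PQ has no code values for negative light, so it is clamped away here.
    y = min(max(nits, 0.0), 10000.0) / 10000.0
    yp = y ** M1
    return ((C1 + C2 * yp) / (1.0 + C3 * yp)) ** M2


# Hypothetical zero-mean noise around black (illustrative values only).
noise = [-0.02, -0.01, 0.0, 0.01, 0.02]
clamped = [max(n, 0.0) for n in noise]

mean_before = sum(noise) / len(noise)
mean_after = sum(clamped) / len(clamped)
codes = [pq_encode(n) for n in noise]

print("mean before clamping:", mean_before)
print("mean after clamping: ", mean_after)
print("PQ codes:", [round(c, 6) for c in codes])
```

All negative samples collapse onto the same code value as zero, so the noise is no longer symmetric around black and its average is lifted. A camera log encoding that reserves code values below nominal black does not have this problem.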

Another challenge in the daily production environment is the diverse array of display properties. Today, you must serve multiple target formats at the same time. Display-referred workflows inevitably lead to a dead end here, because the colour correction is optimised for a single display only. Even the exchange with VFX and editorial cannot be achieved with decent image quality when grading in this way.

Another approach to serving HDR and SDR is to use the backward compatibility of HLG. HLG’s transfer curve has been engineered to produce an acceptable image on Rec.1886 monitors with BT.2020 colour primaries. However, before sending an HLG image to a Rec.1886 display with BT.709 primaries, you must still convert the primaries from BT.2020 to BT.709. The backward compatibility works well in principle, but the authors of the images (directors of photography and colourists) are usually not entirely satisfied with the result, and they cannot easily influence the outcome in SDR without affecting the HDR correction. Therefore, as long as Rec.1886 standard dynamic range is a critical or dominant output format, this option is not ideal. If you are using this approach and the look in SDR is important, a dedicated SDR master should be created. The benefit of HLG’s backward compatibility will only be fully realised when SDR has lost relevance.
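The primaries conversion mentioned above is a 3×3 matrix applied to linear-light RGB, so the signal has to be linearised first. The sketch below uses the rounded BT.2020 to BT.709 coefficients as commonly published (for example in ITU-R BT.2407); the function name is our own, and the handling of out-of-gamut results is deliberately left out.

```python
# Linear-light BT.2020 -> BT.709 primaries conversion (D65 white point).
# Coefficients are rounded to four decimals, as commonly published.
BT2020_TO_BT709 = (
    (1.6605, -0.5876, -0.0728),
    (-0.1246, 1.1329, -0.0083),
    (-0.0182, -0.1006, 1.1187),
)


def convert_primaries(rgb, matrix=BT2020_TO_BT709):
    """Apply a 3x3 matrix to one linear-light RGB triplet."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in matrix)


white = convert_primaries((1.0, 1.0, 1.0))
green = convert_primaries((0.0, 1.0, 0.0))

print("BT.2020 white in BT.709:", [round(c, 4) for c in white])
print("BT.2020 green in BT.709:", [round(c, 4) for c in green])
```

White stays white, but a fully saturated BT.2020 green produces negative red and blue components in BT.709. How those out-of-gamut values are brought back into range is one of the places where the SDR result can drift from what the image authors intended.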

Scene-referred grading

So what is the best way to handle both standard and high dynamic range? The solution originates from analogue photography and has long been used in VFX and cinema post-production: scene-referred colour grading, as opposed to display-referred grading. Creating and compositing visual effects in scene-linear has been common practice for at least two decades. This is because the natural behaviour of light can be reproduced much better in a linear scene-referred environment, which brings significant advantages to VFX work. In digital colour correction, scene-referred working has also been popular since the advent of DI (Digital Intermediate). Scene-referred means that the colour values we manipulate relate directly to the scene.

Let’s look at it more abstractly. In display-referred grading, we were grading the light emitted from a monitor. But now we are manipulating the light in the scene that falls into the camera. One of the great advantages of this method is that you can change the virtual camera with which you ‘film’ the scene dynamically.

For example, we can correct a scene towards warm colours while we evaluate the image through a virtual ‘SDR camera’ on a Rec.1886 monitor. If we now output this scene with an ‘HDR camera’, it will also look plausible on an HDR monitor – with both the warm correction and the high contrast range of the monitor.

Since the colour correction is scene-referred, it is possible to switch dynamically between standard and high dynamic range output at any time. This brings great flexibility to the workflow. Depending on the project, SDR can be handled first, HDR first or both alternately. For example, the colourist can define the look in HDR but continue working in the SDR suite for shot-by-shot matching, before returning to HDR to apply the finishing touches for the HDR deliverables.
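The ‘virtual camera’ idea can be sketched in a few lines. Everything here is a toy under stated assumptions: `warm_grade` is a plain channel gain, and `toy_drt` is a simple roll-off curve that pins mid-grey to an assumed 10 nits. It is not ACES, T-CAM or any other production DRT.

```python
MID_GREY = 0.18  # scene-linear value of a grey card


def warm_grade(rgb, amount=0.1):
    """Scene-referred correction: push red up and blue down in linear light."""
    r, g, b = rgb
    return (r * (1.0 + amount), g, b * (1.0 - amount))


def toy_drt(rgb, peak_nits, grey_nits=10.0):
    """Toy display rendering: scene-linear RGB -> display light in nits.

    Mid-grey always lands on grey_nits; highlights roll off towards peak_nits.
    """
    s = MID_GREY * (peak_nits / grey_nits - 1.0)
    return tuple(peak_nits * c / (c + s) for c in rgb)


# Hypothetical scene values: a grey card and a bright window.
scene = {"grey card": (MID_GREY,) * 3, "window": (10.0, 10.0, 10.0)}

# The grade is applied once, in the scene.
graded = {name: warm_grade(rgb) for name, rgb in scene.items()}

# The same graded scene is then 'filmed' with two virtual cameras.
sdr = {name: toy_drt(rgb, 100.0) for name, rgb in graded.items()}
hdr = {name: toy_drt(rgb, 1000.0) for name, rgb in graded.items()}

for name in scene:
    print(name, "SDR:", [round(c, 1) for c in sdr[name]],
          "HDR:", [round(c, 1) for c in hdr[name]])
```

The warm correction survives in both outputs, mid-grey sits at the same level, and only the highlights differ: the HDR rendering gives the window far more luminance than the SDR one, without the grade being touched.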

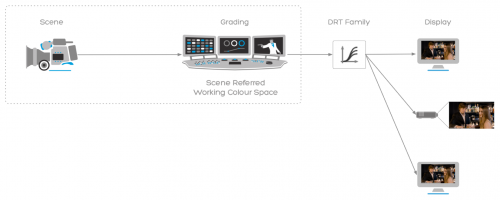

Scene-referred grading. The colourist works in the scene and not in the display, which brings flexibility on the output side amongst other advantages.

The working colour space is scene-referred, typically a logarithmic colour space for colour correction and a linear (or scene-linear) colour space for VFX. From this scene-referred working colour space, a display rendering transform (DRT) converts automatically into the currently required display colour space. This means that a colour space conversion occurs downstream of the colour correction.

Scene-referred workflows are often combined with colour management, and are then known as colour-managed workflows. Colour management means that the software is aware of the current colour space of the image at every point in the chain. The software must understand that, for example, you are currently working with S-Log3 source images and that the connected monitor expects an HLG signal. Based on this information, the software automatically selects the appropriate colour transformations so that the user always sees a plausible result. These transformations can be applied via look-up tables (LUTs), but applying formulas directly on the GPU is better, as they do not clip the signal and are much more precise. Thanks to colour management, the adoption of new camera formats, such as the recent ARRI LogC4 format, is also fairly easy. The software picks up the metadata from the camera file automatically and the grading tools behave as the colourist expects.
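The difference between a LUT and a formula can be made concrete with a deliberately small example. The sketch below compares an analytic gamma encode with a 17-point 1D LUT built from the same function. The LUT size and the transform are assumptions chosen to exaggerate the effect; production LUTs are larger and the errors correspondingly smaller, but the two failure modes are the same.

```python
def encode_formula(x, gamma=2.4):
    """Analytic transform: defined for any non-negative input."""
    return max(x, 0.0) ** (1.0 / gamma)


def build_lut(func, size=17):
    """Sample func at evenly spaced points over the 0..1 input domain."""
    return [func(i / (size - 1)) for i in range(size)]


def apply_lut(lut, x):
    """Linear interpolation; inputs outside 0..1 are clamped to the LUT domain."""
    x = min(max(x, 0.0), 1.0)
    pos = x * (len(lut) - 1)
    i = min(int(pos), len(lut) - 2)
    frac = pos - i
    return lut[i] * (1.0 - frac) + lut[i + 1] * frac


lut = build_lut(encode_formula)

# 1) Clipping: an input above the LUT domain is clamped, the formula carries on.
print("input 2.0  formula:", round(encode_formula(2.0), 4),
      " LUT:", round(apply_lut(lut, 2.0), 4))

# 2) Precision: near black the curve is steep and interpolation misses it.
print("input 0.01 formula:", round(encode_formula(0.01), 4),
      " LUT:", round(apply_lut(lut, 0.01), 4))
```

The LUT returns the same value for every input above its domain, and in the steep part of the curve near black its interpolated result is far from the analytic one.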

On the output side, the user still needs to tell the grading application which display or render colour space is currently required[2]. Usually, there is one house standard for high and one for standard dynamic range. The colourist can choose the HDR flavour of the grading displays because it does not depend on the HDR flavour of the deliverables. With the help of colour management, the software always encodes the ‘display light’ for the selected display parameters. If the input parameters of the display are adjusted accordingly, an HLG picture with BT.2020 primaries, for example, looks the same as an ST.2084 PQ encoded image with P3[3] primaries. The colourist can then decide in which output colour space the measurement instruments (like the RGB Parade scope) are most helpful.

On the input side, the grading software can automatically read the correct input colour space from the camera metadata, as long as it has not been lost during transcoding. We will cover this topic in more detail in part two of this three-part series, where we focus on collaboration in modern broadcast post-production.
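A minimal sketch of metadata-driven input selection might look as follows. The S-Log3 decode follows Sony's published formula; the registry, the `transfer_characteristic` key and the function names are hypothetical and do not describe how any particular grading system stores or reads its metadata.

```python
def slog3_to_linear(v):
    """Sony S-Log3 code value (0..1) -> scene-linear reflectance."""
    if v >= 171.2102946929 / 1023.0:
        return (10.0 ** ((v * 1023.0 - 420.0) / 261.5)) * (0.18 + 0.01) - 0.01
    return (v * 1023.0 - 95.0) * 0.01125 / (171.2102946929 - 95.0)


# Hypothetical registry: metadata tag -> transform into scene-linear.
INPUT_TRANSFORMS = {
    "S-Log3": slog3_to_linear,
    "Linear": lambda v: v,
}


def pick_input_transform(clip_metadata):
    """Choose the input transform from clip metadata (hypothetical key name)."""
    tag = clip_metadata.get("transfer_characteristic")
    if tag is None:
        # Typical after a transcode that dropped the tag: do not guess.
        raise LookupError("colour space tag missing; set the input colour space manually")
    if tag not in INPUT_TRANSFORMS:
        raise LookupError(f"no input transform registered for {tag!r}")
    return INPUT_TRANSFORMS[tag]


decode = pick_input_transform({"transfer_characteristic": "S-Log3"})
print("S-Log3 mid-grey code decodes to:", round(decode(420.0 / 1023.0), 4))
```

The important design choice in this sketch is the failure path: when the tag has been lost, it refuses to guess and asks for a manual choice, because a wrong input colour space silently invalidates every downstream transform.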

There are many advantages to knowing the exact recording colour space of the camera data. And the benefits of scene-referred recording, for example, in a log flavour, can be fully realised only with scene-referred grading.

An anti-example: if you apply a V-Log to Rec.1886 conversion LUT directly to V-Log material as a first step and continue working downstream of it, you might as well have shot in Rec.709. The more powerful manipulation possibilities that the logarithmic image offers are not being utilised.
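The anti-example can be reduced to a question of operation order. In the sketch below, `toy_display_lut` is a hypothetical stand-in for a log-to-Rec.1886 LUT: it works on scene-linear input for brevity and hard-clips at display white, which real LUTs usually soften with a roll-off. The highlight values are illustrative.

```python
def toy_display_lut(x):
    """Toy stand-in for a log-to-Rec.1886 LUT; output is confined to 0..1."""
    return min(max(x, 0.0), 1.0) ** (1.0 / 2.4)


def exposure(x, stops):
    """Exposure change as a simple gain of 2 ** stops."""
    return x * 2.0 ** stops


# Two distinct bright scene values, both above display white (hypothetical).
highlights = (4.0, 8.0)

# Anti-example: display LUT first, then correct the display signal.
baked = [exposure(toy_display_lut(x), -3) for x in highlights]

# Scene-referred: correct the scene first, display transform last.
scene_referred = [toy_display_lut(exposure(x, -3)) for x in highlights]

print("LUT first:   ", [round(v, 4) for v in baked])
print("grade first: ", [round(v, 4) for v in scene_referred])
```

With the LUT applied first, both highlights have already collapsed to the same value, and pulling the exposure down afterwards only produces a uniform grey. With the grade applied first, the same three-stop correction brings back the separation between them.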

The DRT achieves SDR and HDR output through global mapping. A correction made in HDR looks plausible in SDR but can often be creatively optimised. Grading software cannot (yet) read scripts, so it does not know whether the information in the overexposed window is relevant or unimportant to the story. It is therefore common for colourists to make a dedicated trim pass for all critical output formats, but this is not mandatory. With the decreasing relevance of SDR, this will most likely become less important in the future. The colour correction department can output an SDR file via the global DRT mapping, for example, for sound mixing or subtitling, at any time, even for HDR productions, without additional effort.

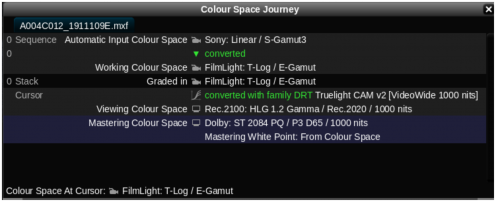

A typical colour space journey in a scene-referred workflow. The grading software automatically sets the input colour space based on the clip metadata. It is crucial that the working colour space uses a scene-referred encoding and that the viewing colour space matches the display parameters.

If you want to work scene-referred, you must decide on a specific colour management workflow. ACES is the most widely used solution and acts as a lowest common denominator that almost all software supports. The Academy has set important milestones with ACES and launched a worldwide movement. Camera manufacturers ARRI and RED also offer colour pipelines containing HDR and SDR DRTs: ARRI REVEAL (Legacy Alexa: ALF-2) and RED IPP2, respectively. However, these are optimised for the respective cameras and are not recommended for all types of camera footage.

There are also native colour management workflows within grading systems. Truelight-CAM, or T-CAM, is an option available in the Baselight colour grading system. T-CAM is founded on the same ideas as ACES and has been optimised by FilmLight based on feedback from users around the globe. FilmLight’s engineers put particular emphasis on look development flexibility, grading tool behaviour, minimisation of image artefacts and visual consistency between HDR and SDR. In the modernisation of the SWR colour correction process the needs of colourists were a priority, and T-CAM was chosen as the standard DRT.

Colourists who are used to working display-referred must transition to the scene-referred way of working. While this is a necessary step that many colourists have already taken in recent years, one should not expect this shift to happen overnight and without challenges. Ideally, there should be a sufficiently long transition period during which work can be carried out in a hybrid workflow such as that available in Baselight.

The colourists at SWR, for example, were trained by FilmLight on scene-referred grading. The hybrid workflow was also explained, in which scene-referred grading is preferred, but colourists could switch to their previous methods of display-referred grading at any time. As long as HDR is not yet delivered, colourists can gain vital experience in scene-referred correction on real projects. Ideally, users have a contact person who is particularly familiar with this topic to provide advice and tips. At SWR, FilmLight accompanies the team with further training and is directly available for questions and problems.

Re-mastering archive material for HDR

Broadcasters hold vast amounts of legacy archive footage and, with the introduction of HDR, source material often has to be converted from SDR to HDR. There may only be a few shots, but sometimes there are longer segments or entire programmes. Almost all solutions on the market try to upconvert the SDR signal to HDR with an optimised curve, which means that the software applies the same curve to all pixels of an image. Existing image artefacts, such as macroblocks caused by lossy DCT compression, are then amplified, significantly limiting the possibilities of utilising the HDR range.

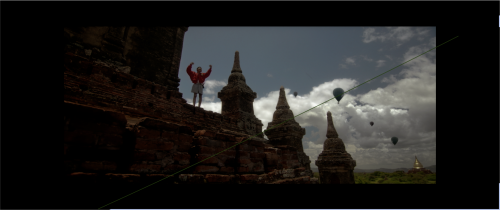

A better method is to use spatial conversion, which means the conversion is dynamically based on the surrounding pixels. This technology is employed when converting from SDR to HDR using the Boost Range tool in Baselight. As a result, noise and sharp edges of macroblocks, sharpening overshoots or signal clipping are not amplified. The result is more appealing HDR images that exploit the possibilities of HDR and provide a natural appearance.

Boost Range stretches the image content from SDR to HDR without revealing DCT compression artefacts. This creates a natural appeal in images without artificial over-sharpening.
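The difference between a global curve and a spatially aware conversion can be illustrated with a one-dimensional toy. This is not the Boost Range algorithm, whose internals are not described here. It is a generic base/detail split under our own assumptions: the expansion curve is applied to a blurred copy of the signal, and the fine detail is added back unamplified. The curve, the blur radius and the pixel values are all illustrative.

```python
def expand(x):
    """Toy SDR-to-HDR expansion curve (arbitrary output units)."""
    return 4.0 * x * x


def box_blur(row, radius=2):
    """Box blur with edge clamping."""
    out = []
    for i in range(len(row)):
        window = [row[min(max(j, 0), len(row) - 1)]
                  for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def global_expand(row):
    """Same curve applied to every pixel."""
    return [expand(x) for x in row]


def spatial_expand(row, radius=2):
    """Curve applied to the blurred base; detail added back unamplified."""
    base = box_blur(row, radius)
    return [expand(b) + (x - b) for x, b in zip(row, base)]


def max_step(row):
    """Largest jump between neighbouring pixels."""
    return max(abs(b - a) for a, b in zip(row, row[1:]))


# A bright, flat area with a small macroblock edge in the middle.
row = [0.80] * 8 + [0.82] * 8

print("input edge:      ", round(max_step(row), 4))
print("global curve:    ", round(max_step(global_expand(row)), 4))
print("spatial variant: ", round(max_step(spatial_expand(row)), 4))
```

Both variants reach the same levels in the flat areas, but the global curve multiplies the block edge by the local slope of the curve, whereas the spatial variant spreads the level change over several pixels. A practical implementation would use an edge-aware filter rather than a box blur so that genuine image edges stay sharp.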

Film scanner integration

Sometimes existing film scanners need to be integrated into the new infrastructure, and this was the case with SWR. The challenge here is how to integrate film scans into scene-referred colour management in the best way possible. Many users rely on Academy Density Exchange (ADX) as a bridge from the scanner to the colour management. However, some users are unsatisfied with this solution, as scanner manufacturers often do not optimise their output images for ADX. In addition to ADX, Baselight offers another input colour space specifically optimised for generic Cineon scans, usually with better colour reproduction.

Film scans can never be as accurate as scene-referred footage from a modern digital camera. There are simply too many variables, such as the type and age of the film stock, as well as the scanner type and scan parameters. All of this affects colour reproduction. However, the generic Cineon input colour space is robust against all of these extremes and has proven itself in the field.

We discuss solutions regarding image restoration of archival footage in more detail in the final part of this three-part series, on IT in modern broadcast post-production.

Conclusion

The transition to scene-referred grading may seem complex, but it is a very sustainable step. Should a display colour space or the distribution path change in the near or distant future, it will have no impact on the workflow during colour grading; you simply render into a different or additional output format. The grading tools feel the same to the colourist, even if the peak brightness, the EOTF or the display primaries change, or if new camera colour spaces are introduced. If a project is started in SDR and an HDR version is spontaneously requested, then you have not wasted any labour.

Therefore, with a scene-referred working style, you are covered for all eventualities – the risk of betting on the wrong horse and, in the worst case, having to change the workflow again after a few years can be ruled out.

Keep an eye out for parts two and three of this series, where we will look at collaboration with other departments and other optimisations in the broadcast environment. We show how metadata can increase efficiency and how recurring manual steps can be automated, leading to fewer errors and faster production.

About the authors

Andy Minuth started a career as a colourist at CinePostproduction in Munich after graduating with a Bachelor of Engineering degree in Audiovisual Media in 2008. After also working as head of the colour department at 1000 Volt in Istanbul, he joined FilmLight in 2017. As a colour workflow specialist, he is responsible for training, consulting and supporting customers and is also involved in the development of new features in Baselight.

After completing his Master of Arts in Electronic Media, Daniele Siragusano worked for almost five years at CinePostproduction in Munich, where he advanced to become the technical director of post-production. In 2014, Daniele joined FilmLight as a workflow specialist and image engineer. Since then, he has been deeply involved in developing HDR grading and colour management tools within Baselight.

Endnotes

[1] Hybrid colour space of logarithm and power (gamma) functions. The authors are aware that HLG is advertised as a scene-referred colour space. In actual practice, however, it is used in post-production as a display-referred colour space.

[2] Various metadata standards are currently being defined for SDI, HDMI and IP-based video formats, so in future the grading system will be able to configure the monitor.

[3] DCI-P3 D65 – SMPTE EG 432-1:2010